2022-01-31 Formulation

Contents

2022-01-31 Formulation¶

Wednesday (Feb 2) we’ll be back in person (if you want to be) in FLMG 157.

Last time¶

Derive Newton’s method via fixed point convergence theory

Newton methods in computing culture

Today¶

Breaking Newton’s method

Exploration

Multiple roots

Conditioning of the rootfinding problem

Forward and backward stability

using Plots

default(linewidth=4, legendfontsize=12)

function newton_hist(f, fp, x0; tol=1e-12)

x = x0

hist = []

for k in 1:100 # max number of iterations

fx = f(x)

fpx = fp(x)

push!(hist, [x fx fpx])

if abs(fx) < tol

return vcat(hist...)

end

x = x - fx / fpx

end

end

fp (generic function with 1 method)

Formulations are not unique (functions)¶

If \(x = g(x)\) then

g(x) = cos(x)

gp(x) = -sin(x)

h(x) = -1 / (gp(x) - 1)

g3(x) = x + h(x) * (g(x) - x)

plot([x-> x, cos, g3], ylims=(-5, 5))

We don’t know \(g'(x_*)\) in advance because we don’t know \(x_*\) yet.

This method converges very fast

We actually just derived Newton’s method.

Newton’s method by fixed point convergence¶

A rootfinding problem \(f(x) = 0\) can be converted to a fixed point problem

\[x = x + f(x) =: g(x)\]but there is no guarantee that \(g'(x_*) = 1 + f'(x_*)\) will have magnitude less than 1.Problem-specific algebraic manipulation can be used to make \(|g'(x_*)|\) small.

\(x = x + h(x) f(x)\) is also a valid formulation for any \(h(x)\) bounded away from \(0\).

Can we choose \(h(x)\) such that

\[ g'(x) = 1 + h'(x) f(x) + h(x) f'(x) = 0\]when \(f(x) = 0\)?

In other words,

Quadratic convergence!¶

What does it mean that \(g'(x_*) = 0\)?

It turns out that Newton’s method has locally q-quadratic convergence to simple roots,

\[\lim_{k \to \infty} \frac{|e_{k+1}|}{|e_k|^2} < \infty.\]“The number of correct digits doubles each iteration.”

Now that we know how to make a good guess accurate, the effort lies in getting a good guess.

Rootfinding methods outlook¶

Newton methods are immensely successful

Convergence theory is local; we need good initial guesses (activity)

Computing the derivative \(f'(x)\) is intrusive

Avoided by secant methods (approximate the derivative; activity)

Algorithmic or numerical differentiation (future topics)

Bisection is robust when conditions are met

Line search (activity)

When does Newton diverge?

More topics

Find all the roots

Use Newton-type methods with bounds

Times when Newton converges slowly

Exploratory rootfinding¶

Find a function \(f(x)\) that models something you’re interested in. You could consider nonlinear physical models (aerodynamic drag, nonlinear elasticity), behavioral models, probability distributions, or anything else that that catches your interest. Implement the function in Julia or another language.

Consider how you might know the output of such functions, but not an input. Think from the position of different stakeholders: is the equation used differently by an experimentalist collecting data versus by someone making predictions through simulation? How about a company or government reasoning about people versus the people their decisions may impact?

Formulate the map from known to desired data as a rootfinding problem and try one or more methods (Newton, bisection, etc., or use a rootfinding library).

Plot the inverse function (output versus input) from the standpoint of one or more stakeholder. Are there interesting inflection points? Are the methods reliable?

If there are a hierarchy of models for the application you’re interested in, consider using a simpler model to provide an initial guess to a more complicated model.

Equation of state example¶

Consider an equation of state for a real gas, which might provide pressure \(p(T, \rho)\) as a function of temperature \(T\) and density \(\rho = 1/v\).

An experimentalist can measure temperature and pressure, and will need to solve for density (which is difficult to measure directly).

A simulation might know (at each cell or mesh point, at each time step) the density and internal energy, and need to compute pressure (and maybe temperature).

An analyst might have access to simulation output and wish to compute entropy (a thermodynamic property whose change reflects irreversible processes, and can be used to assess accuracy/stability of a simulation or efficiency of a machine).

The above highlights how many equations are incomplete, failing to model how related quantities (internal energy and entropy in this case) depend on the other quantities. Standardization bodies (such as NIST, here in Boulder) and practitioners often prefer models that intrinsically provide a complete set of consistent relations. An elegent methodology for equations of state for gasses and fluids is by way of the Helmholtz free energy, which is not observable, but whose partial derivatives define a complete set of thermodynamic properties. The CoolProp software has highly accurate models for many gasses, and practitioners often build less expensive models for narrower ranges of theromdynamic conditions.

Roots with multiplicity¶

There are multiple ways to represent (monic) polynomials

poly_eval_prod(x, a) = prod(x .- a)

function poly_eval_sum(x, b)

sum = 1

for c in b

# This is known as Horner's rule

sum = x * sum + c

end

sum

end

eps = 1e-10

a = [1e5, 1e5*(1)] # tiny perturbation to root

b = [-(a[1] + a[2])*(1), # tiny perturbation to monomial coefficent

a[1]*a[2]]

f(x) = poly_eval_prod(x, a)

g(x) = poly_eval_sum(x, b)

g (generic function with 1 method)

plot([f, g], xlims=(a[1]-2, a[2]+2), ylims=(-3, 3))

plot!(zero, color=:black, label=:none)

Perturbing the coefficient in the tenth digit made the difference between one root and two.

The distance between the roots was big when \(b_1\) was perturbed.

How did the roots move?¶

Take two roots at \(x=a\) and perturb the middle coefficient.

Analytically, we know the roots are at

plot([x->x, sqrt], xlims=(0, .1))

Note that condition number is well behaved.

What does Newton find?¶

hist = newton_hist(g, x -> 2*x + b[1], 1.1e5; tol=1e-14)

25×3 Matrix{Float64}:

110000.0 1.0e8 20000.0

105000.0 2.5e7 10000.0

102500.0 6.25e6 5000.0

101250.0 1.5625e6 2500.0

100625.0 390625.0 1250.0

1.00312e5 97656.2 625.0

1.00156e5 24414.1 312.5

1.00078e5 6103.52 156.25

1.00039e5 1525.88 78.125

1.0002e5 381.47 39.0625

1.0001e5 95.3674 19.5312

1.00005e5 23.8419 9.76562

1.00002e5 5.96046 4.88281

1.00001e5 1.49012 2.44141

1.00001e5 0.372528 1.2207

1.0e5 0.093132 0.610353

1.0e5 0.023283 0.305179

1.0e5 0.00582123 0.152593

100000.0 0.00145531 0.0762955

100000.0 0.000364304 0.0381463

100000.0 9.15527e-5 0.0190459

100000.0 2.28882e-5 0.00943207

100000.0 5.72205e-6 0.0045788

100000.0 1.90735e-6 0.00207943

100000.0 0.0 0.000244935

plot(hist[:,1], hist[:,2], seriestype=:path, marker=:auto)

plot!(g, title="Root $(hist[end,1])", alpha=.5)

plot!(zero, color=:black, label=:none)

Convergence is kinda slow.

The solution is not nearly as accurate as machine precision.

Using Polynomials¶

Julia has a nice package to evaluate and manipulate polynomials. The coefficients are given in the other order \(b_0 + b_1 x + b_2 x^2 + \dotsb\).

using Polynomials

@show fpoly = Polynomial([2, -3, 1])

@show gpoly = fromroots([1, 2] .+ 1e5)

derivative(gpoly)

fpoly = Polynomial([2, -3, 1]) = Polynomial(2 - 3*x + x^2)

gpoly = fromroots([1, 2] .+ 100000.0) = Polynomial(1.0000300002e10 - 200003.0*x + 1.0*x^2)

newton_hist(gpoly, derivative(gpoly), 3)

23×3 Matrix{Float64}:

3.0 9.9997e9 -199997.0

50002.2 2.49993e9 -99998.5

75001.9 6.24981e8 -49999.3

87501.7 1.56245e8 -24999.6

93751.6 3.90613e7 -12499.8

96876.5 9.76533e6 -6249.91

98439.0 2.44133e6 -3124.95

99220.3 6.10333e5 -1562.48

99610.9 1.52583e5 -781.239

99806.2 38145.7 -390.62

99903.8 9536.37 -195.311

99952.7 2384.03 -97.6582

99977.1 595.945 -48.8342

99989.3 148.924 -24.4273

99995.4 37.1686 -12.2341

99998.4 9.23006 -6.15794

99999.9 2.24666 -3.16017

1.00001e5 0.505424 -1.7383

1.00001e5 0.0845398 -1.15679

100001.0 0.0053409 -1.01063

100001.0 2.79285e-5 -1.00006

100001.0 7.85803e-10 -1.0

100001.0 0.0 -1.0

Finding all roots of polynomials¶

roots(Polynomial([1e10, -2e5*(1 + 1e-10), 1]))

2-element Vector{Float64}:

99998.58579643762

100001.41422356237

p = fromroots([0., 1., 2, 3] .+ 1e3)

xs = roots(p)

scatter(real(xs), imag(xs), color=:red)

for i in 1:100

r = randn(length(p)) # normally distributed mean 0, stddev 1

q = copy(p)

q[:] .*= 1 .+ 1e-10 * r

xs = roots(q)

scatter!(real(xs), imag(xs), markersize=1)

end

plot!(legend=:none)

Fundamental Theorem of Algebra¶

Every non-zero, single-variable, degree \(n\) polynomial with complex coefficients has, counted with multiplicity, exactly \(n\) complex roots.

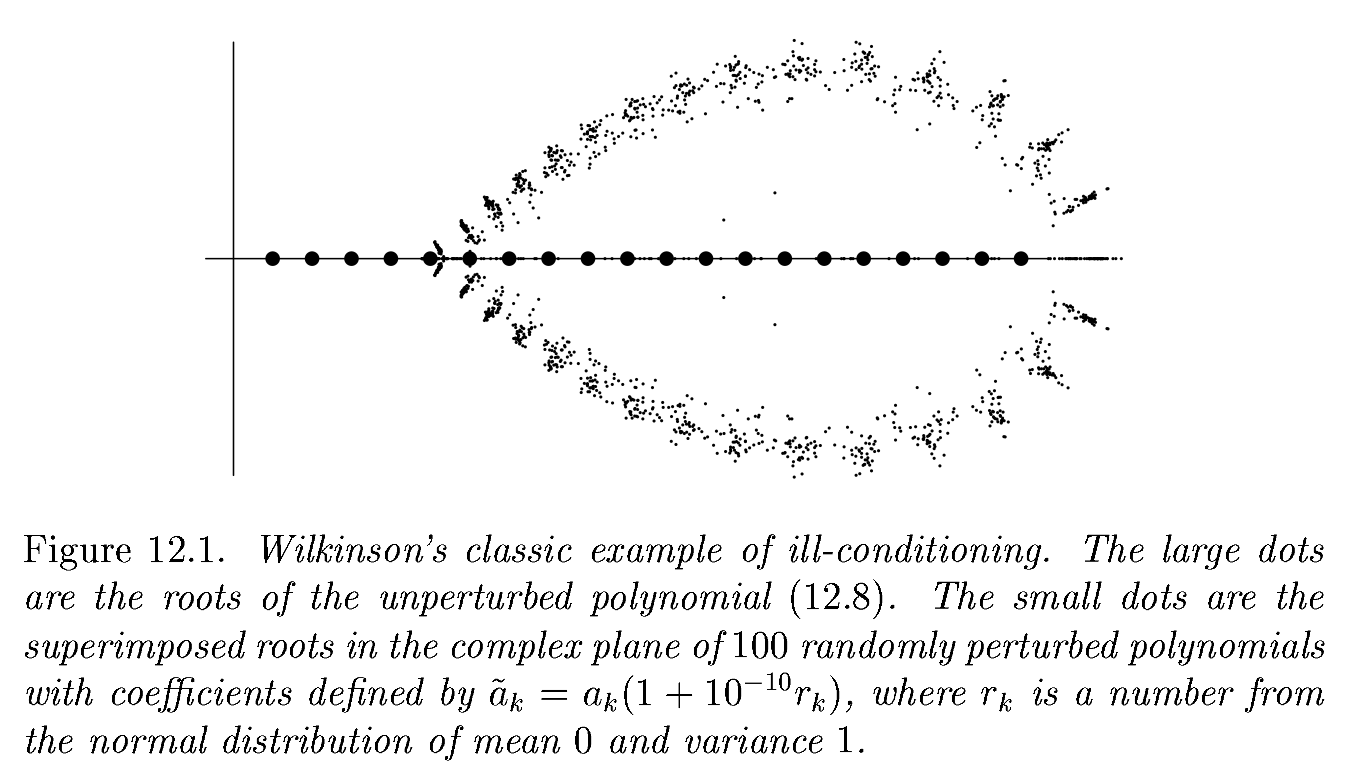

Wilkinson’s polynomial¶

Suppose we add more terms

n = 20

a = collect(1.:n)

w = fromroots(a)

w[10] *= 1 + 1e-12

plot(x -> abs(w(x)), xlims=(0, n+1), yscale=:log10)

w = fromroots(a)

scatter(a, zero(a), color=:red)

for i in 1:100

r = randn(length(w))

q = copy(w)

q[:] .*= 1 .+ 1e-10 * r

xs = roots(q)

scatter!(real(xs), imag(xs), markersize=1)

end

plot!(legend=:none)

Which is better to model inputs to a rootfinder?¶

A: coefficients \(a_k\) in

\[p(x) = \prod_k (x - a_k)\]

B: coefficients \(b_k\) in

\[p(x) = \sum_k b_k x^k\]

Figure from Trefethen and Bau (1999)¶

Forward vs backward error and stability¶

Stability¶

“nearly the right answer to nearly the right question”

Backward Stability¶

“exactly the right answer to nearly the right question”

Every backward stable algorithm is stable.

Not every stable algorithm is backward stable.

Map angle to the unit circle¶

theta = 1 .+ LinRange(0, 3, 100)

scatter(cos.(theta), sin.(theta), legend=:none, aspect_ratio=:equal)

theta = LinRange(1., 1+2e-5, 100)

mysin(t) = cos(t - (1e10+1)*pi/2)

plot(cos.(theta), sin.(theta), aspect_ratio=:equal)

scatter!(cos.(theta), mysin.(theta))

Are we observing A=stabiltiy, B=backward stability, or C=neither?¶

The numbers \((\widetilde\cos \theta, \widetilde\sin \theta = (\operatorname{fl}(\cos \theta), \operatorname{fl}(\sin\theta))\) do not lie exactly on the unit circles.

There does not exist a \(\tilde\theta\) such that \((\widetilde\cos \theta, \widetilde \sin\theta) = (\cos\tilde\theta, \sin\tilde\theta)\)

Accuracy of backward stable algorithms (Theorem)¶

A backward stable algorithm for computing \(f(x)\) has relative accuracy

In practice, it is rarely possible for a function to be backward stable when the output space is higher dimensional than the input space.